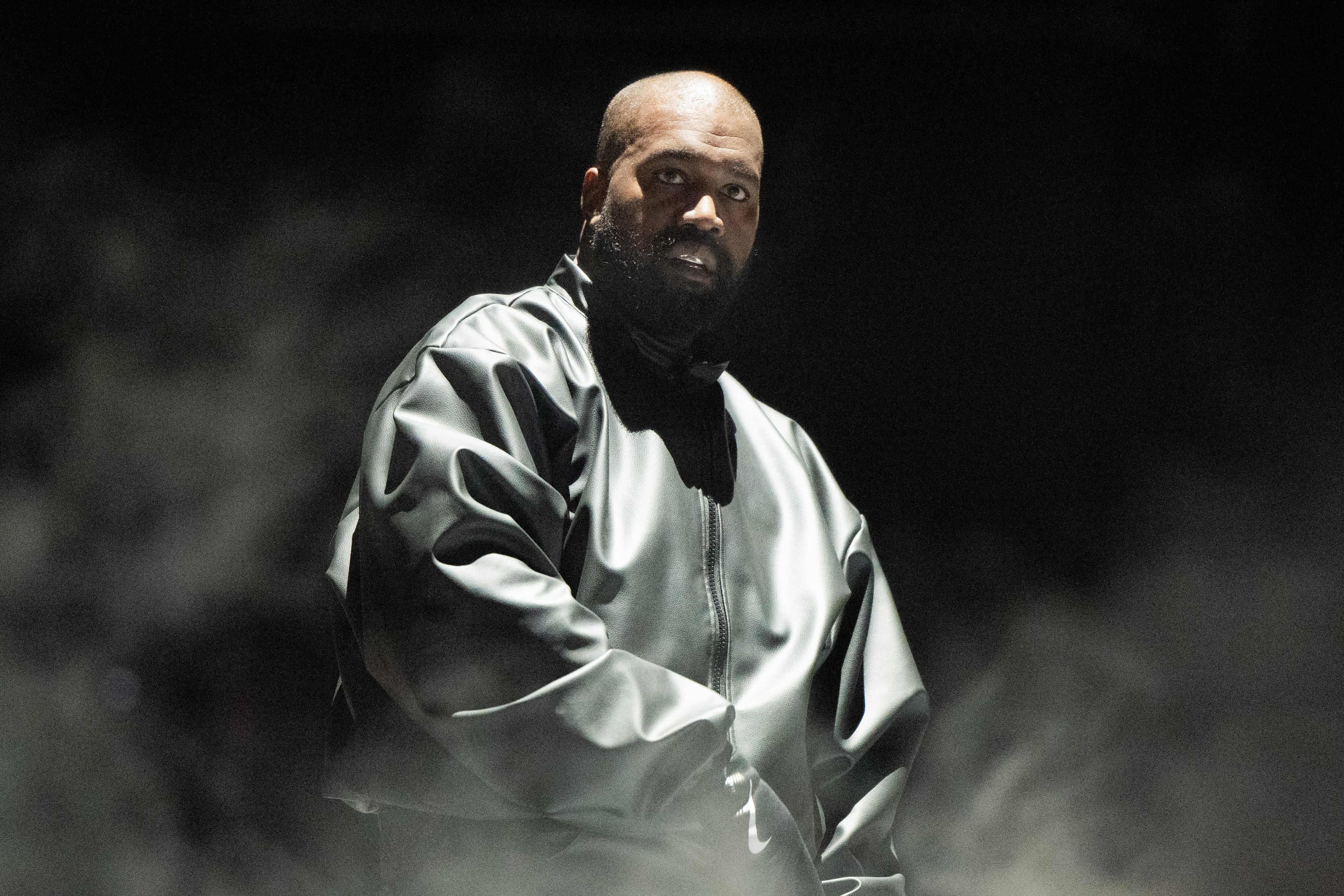

Kanye West's Dental Nightmare: Laughing Gas Addiction Lawsuit

Introduction: A Painful Revelation?

Hold on to your hats, folks! The Kanye West saga takes another wild turn, and this time, it involves a dentist, alleged addiction, and a whole lot of laughing gas. Is this the plot of a bizarre comedy or a serious legal battle? It appears to be the latter. Kanye West and his wife Bianca Censori are reportedly gearing up to sue Dr. Thomas Connelly, claiming he's responsible for West's alleged dependence on nitrous oxide, aka "laughing gas."

West and Censori's Legal Offensive

Apparently, West and Censori are presenting a united front against Dr. Connelly. They've reportedly sent a formal notice of intent to sue, a signal that they're serious about pursuing legal action. But what exactly are the accusations?

Accusations of Malpractice

According to the notice, obtained by E! News, West and Censori's lawyers from Golden Law are accusing Dr. Connelly of malpractice. The core of the complaint? They claim he supplied West with "copious amounts" of nitrous oxide during cosmetic dental treatments between 2024 and 2025. Sounds like more than just a quick teeth whitening session!

Left to "Fend for Himself"

The notice alleges that West was left to "fend for himself with a serious induced addiction" after the dentist's alleged over-administration of laughing gas. Can you imagine going in for a dental procedure and coming out with a dependency? It's a serious accusation that could have significant consequences.

Nitrous Oxide: More Than Just Giggles?

Nitrous oxide is often used in dental procedures for its pain-relieving and anxiety-reducing effects. But is it harmless? Let's delve a little deeper into what laughing gas is all about.

The Euphoric Effects of Laughing Gas

Nitrous oxide, when inhaled, produces a sense of euphoria and relaxation. That's why it's commonly referred to as "laughing gas." It can also reduce pain perception, making it a valuable tool for dentists. But anything that makes you feel *that* good is bound to have some potential downsides, right?

The Risks of Overuse

While generally considered safe when administered properly by a trained professional, overuse or abuse of nitrous oxide can lead to serious health problems. These can include neurological damage, vitamin B12 deficiency, and even addiction. So, it's not just giggles and grins – it's a serious substance that needs to be handled with care.

Connelly's Alleged Actions: A Deep Dive

The notice claims Dr. Connelly's actions went far beyond what was medically necessary. What are the specific allegations against him?

"No Legitimate Dental or Medical Justification"

West and Censori's lawyers allege that Dr. Connelly administered nitrous oxide in quantities and frequencies that had "no legitimate dental or medical justification." That's a pretty strong statement. Were there other options available? Why was such a high dose used?

Sedative Substances and Concerns

The notice also mentions "other sedative substances," raising further questions about the types of medications West was allegedly given. Was this a case of unnecessary over-medication?

The "Notice of Intent to Sue": What Does It Mean?

A "notice of intent to sue" is a formal notification that someone plans to file a lawsuit. It's often a required step before a lawsuit can actually be filed, giving the other party a chance to respond and potentially resolve the issue without going to court. So, what happens next?

A Chance for Resolution?

The notice gives Dr. Connelly the opportunity to respond to the allegations and potentially negotiate a settlement. Will he fight back, or try to settle out of court?

Preparing for a Legal Battle

If Dr. Connelly doesn't respond or if the parties can't reach an agreement, West and Censori will likely proceed with filing a formal lawsuit. This could lead to a lengthy and potentially messy legal battle.

Cosmetic Dentistry: A Double-Edged Sword?

The case shines a spotlight on the world of cosmetic dentistry. While many people seek cosmetic procedures to improve their appearance and confidence, there are potential risks involved. Is the pursuit of the perfect smile worth the risk of addiction or other health problems?

The Pressure for Perfection

In today's image-obsessed society, there's immense pressure to look a certain way. Cosmetic dentistry can offer solutions for people who are unhappy with their teeth, but it's important to weigh the benefits against the potential risks and costs.

The Importance of Informed Consent

Patients undergoing cosmetic dental procedures need to be fully informed about the risks and benefits of any treatments, including the potential for side effects and complications. Informed consent is crucial to ensure patients make the best decisions for their health.

The Broader Implications

This case could have broader implications for the dental industry, potentially leading to increased scrutiny of the use of nitrous oxide and other sedative medications. Will this case change how dentists administer laughing gas?

Increased Regulation?

If the allegations against Dr. Connelly are proven true, it could prompt calls for stricter regulation of the use of nitrous oxide in dental practices. This could include limiting the amount of gas that can be administered or requiring dentists to undergo additional training.

A Wake-Up Call for Patients

The case may also serve as a wake-up call for patients, encouraging them to be more proactive in their own healthcare and to ask questions about the medications they're being given. Patients need to feel empowered to speak up if they have concerns or questions about their treatment.

Conclusion: Waiting for the Verdict

The Kanye West-Dr. Connelly saga is far from over. Whether it ends in a settlement or a full-blown trial, the case has already raised important questions about the use of nitrous oxide in dentistry and the potential for addiction. Only time will tell what the final outcome will be, but one thing is certain: this is a story that will continue to capture our attention. Will the truth come to light, or will this case become another footnote in the ever-evolving Kanye West narrative?

Frequently Asked Questions

- What is nitrous oxide and how is it used in dentistry?

Nitrous oxide, also known as "laughing gas," is a sedative gas used in dentistry to help patients relax and reduce pain during procedures. It's inhaled through a mask and wears off quickly after the procedure is complete.

- What are the potential risks of nitrous oxide?

While generally safe when administered properly, nitrous oxide can cause side effects like nausea, vomiting, and dizziness. Long-term or excessive use can lead to more serious complications, including vitamin B12 deficiency and neurological damage.

- What is a "notice of intent to sue"?

A "notice of intent to sue" is a formal notification that someone plans to file a lawsuit. It gives the other party a chance to respond, investigate the claims, and potentially resolve the issue without going to court.

- What is malpractice in the context of this case?

In this case, malpractice would refer to Dr. Connelly allegedly providing excessive amounts of nitrous oxide to Kanye West without a legitimate medical justification, leading to addiction and other potential health problems.

- What should patients do if they are concerned about their dental treatment?

If you have any concerns about your dental treatment, it's important to speak up and ask questions. Get a second opinion if you're not comfortable with the care you're receiving, and make sure you understand the risks and benefits of any procedures or medications.