Ye's "Heil Hitler" Song: Millions See Hate Speech on X

Introduction: The Digital Wild West of Hate Speech

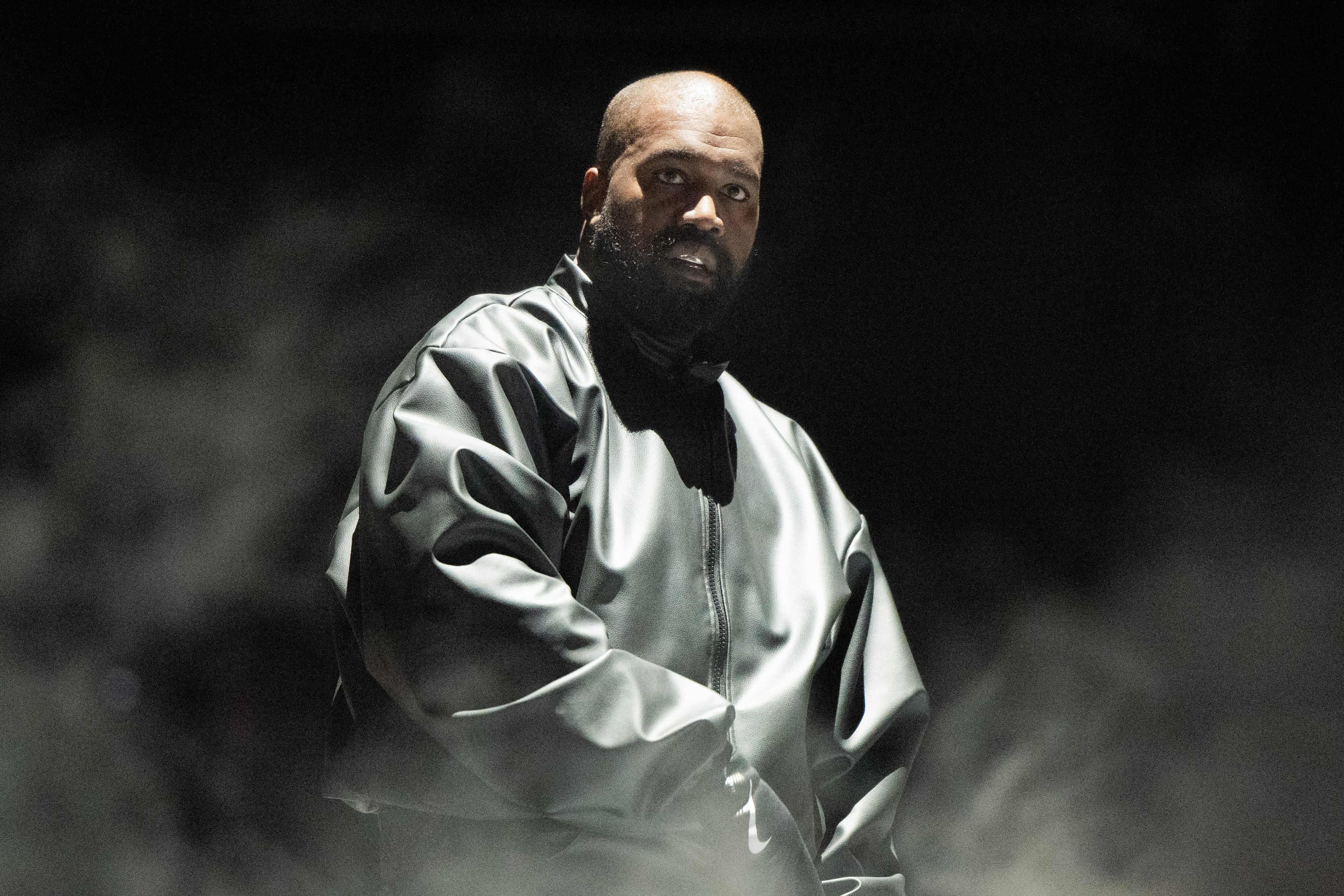

In today's interconnected world, information spreads like wildfire. But what happens when that information is toxic? We're talking about hate speech, folks. The latest example causing outrage is a new song from Ye (formerly Kanye West) titled "Heil Hitler," released along with a companion track, "WW3." Shockingly, the song has garnered millions of views on social media, particularly on Elon Musk's X. It's a stark reminder of the challenges platforms face in moderating content and of the varying approaches they take. It raises the question: are we entering a new era of unchecked hate online?

The Song's Content: Glorifying the Unspeakable

The content of these songs is deeply disturbing. They glorify Adolf Hitler, the architect of the Holocaust, a man responsible for the systematic murder of millions. It's not just offensive; it's a blatant display of antisemitism and hate speech. This kind of rhetoric has no place in a civilized society, online or offline.

Platform Responses: A Patchwork of Moderation

Some platforms, including Spotify and SoundCloud, have taken steps to remove the songs, but those efforts have been largely ineffective. The content continues to pop up across the internet like a persistent weed. This inconsistency in moderation raises serious questions about the responsibility of tech companies. Are they doing enough to combat hate speech?

X's Role: A Platform Under Scrutiny

Elon Musk's X, in particular, has come under intense scrutiny. Despite claims of combating hate speech, the song has racked up millions of views on the platform. This has led to accusations that X is failing to adequately moderate its content. It's a problem that goes far beyond just one song. Let's break this down further:

X's Content Moderation Policies: An Overview

X's content moderation policies have shifted considerably since Elon Musk's acquisition. The platform claims to prioritize free speech, but critics argue that this has come at the expense of effective hate speech moderation. The platform relies heavily on user reporting and automated systems, which many believe are insufficient.

Millions of Views and Counting: The Power of Virality

The fact that the "Heil Hitler" song has garnered millions of views on X is not just a numbers game; it's a demonstration of the potential for hate speech to spread rapidly online. The algorithms that drive social media platforms can amplify harmful content, making it even more difficult to contain. This raises concerns about the impact of this virality. What influence does this type of content have on its audience?

The Fractured Online Landscape: A Battle for Standards

The varying responses from different platforms highlight the fractured nature of the online landscape. Some platforms prioritize safety and inclusivity, while others lean towards a more hands-off approach. This creates a situation where hate speech can thrive on platforms with weaker moderation policies. Think of it like a game of whack-a-mole; you shut it down in one place, and it pops up in another.

The Impact of Relaxed Moderation: A Slippery Slope?

Some platforms have rolled back their moderation practices in recent years, arguing that they are promoting free speech. However, critics argue that this creates a breeding ground for hate speech and misinformation. Is there a point where "free speech" becomes "dangerous speech"? It's a debate with no easy answers.

The Role of Algorithms: Amplifying the Problem

Algorithms play a significant role in what we see online. They are designed to show us content that we are likely to engage with, which can create echo chambers and amplify harmful content. If an algorithm is prioritizing engagement over safety, it can inadvertently contribute to the spread of hate speech.
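To make that trade-off concrete, here is a minimal toy sketch in Python. It does not describe how X or any real platform ranks content; the `Post` fields, the scores, and the `safety_weight` parameter are all hypothetical values invented for illustration. It only shows how the same set of posts can be ordered very differently depending on whether a ranking accounts for likely harm.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    predicted_engagement: float  # hypothetical score in [0, 1]
    harm_score: float            # hypothetical classifier output in [0, 1]


def rank(posts, safety_weight=0.0):
    """Order posts by predicted engagement minus a weighted harm penalty.

    With safety_weight=0.0 the ranking looks at engagement alone.
    """
    return sorted(
        posts,
        key=lambda p: p.predicted_engagement - safety_weight * p.harm_score,
        reverse=True,
    )


# Made-up example posts; none of these numbers come from real data.
posts = [
    Post("news", 0.40, 0.05),
    Post("inflammatory", 0.90, 0.95),
    Post("hobby", 0.55, 0.01),
]

engagement_only = [p.post_id for p in rank(posts)]
safety_aware = [p.post_id for p in rank(posts, safety_weight=1.0)]

print(engagement_only)  # ['inflammatory', 'hobby', 'news']
print(safety_aware)     # ['hobby', 'news', 'inflammatory']
```

In this toy setup, ranking on engagement alone puts the inflammatory post first, while adding even a simple harm penalty pushes it to the bottom. Real systems are far more complex, but the basic tension is the same.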

Legal Implications: Where Does Responsibility Lie?

The legal implications of hosting hate speech on social media platforms are complex. In many countries, platforms are not legally responsible for the content that users post. However, there is growing pressure for platforms to take more responsibility for the content they host. Those calling for change think of it like a landlord being held responsible for what tenants do on the property.

The Fight Against Antisemitism: A Renewed Urgency

The rise of antisemitism online and offline is deeply concerning. The "Heil Hitler" song is just one example of a broader trend. We need to renew our commitment to fighting antisemitism and all forms of hate speech. This requires a multi-faceted approach, including education, awareness campaigns, and stricter content moderation policies.

Education as a Countermeasure

Education plays a pivotal role in combating hate speech. By teaching people about the history and dangers of antisemitism and other forms of hate, we can help to prevent the spread of harmful ideologies. Education can take many forms, from classroom instruction to online resources.

Community Action and Reporting Mechanisms

Empowering communities to report hate speech is essential. Platforms should make it easy for users to report content that violates their policies, and they should respond promptly to those reports. Community action can also involve organizing counter-protests and supporting organizations that fight hate.

The Importance of Holding Platforms Accountable

We need to hold social media platforms accountable for the content they host. This means demanding greater transparency and stricter moderation policies. It also means supporting legislation that would hold platforms liable for the spread of hate speech. After all, with great power comes great responsibility.

Moving Forward: A Call for Action

The spread of the "Heil Hitler" song is a wake-up call. We need to take action to combat hate speech online and offline. This requires a collective effort from tech companies, policymakers, educators, and individuals. Together, we can create a more inclusive and tolerant online environment.

Conclusion: A Future Free of Hate?

The controversy surrounding Ye's "Heil Hitler" song underscores the ongoing challenges of content moderation in the digital age. The fractured online landscape, varying platform policies, and the amplification of hate speech through algorithms all contribute to the problem. Ultimately, combating hate requires a multi-pronged approach involving education, community action, stricter platform accountability, and a renewed commitment to fighting antisemitism and all forms of discrimination.

Frequently Asked Questions

- Why is it difficult for platforms to remove hate speech entirely?

Hate speech is often cloaked in ambiguity and can be difficult to distinguish from protected speech. The sheer volume of content uploaded daily makes reviewing everything by hand impractical, and automated systems aren't perfect, leading to errors and inconsistencies.

- What role do algorithms play in the spread of hate speech?

Algorithms are designed to maximize engagement, often by showing users content they're likely to agree with or react to strongly. This can create echo chambers where hate speech is amplified and normalized, further spreading its reach.

- What can individuals do to combat hate speech online?

You can report hate speech when you see it, engage in respectful dialogue with those who hold different views, support organizations that fight hate, and educate yourself and others about the dangers of prejudice and discrimination.

- Are social media platforms legally responsible for the content users post?

The legal landscape is complex and varies by country. In many cases, platforms have legal protections that shield them from liability for user-generated content. However, there is growing pressure to reform these laws and hold platforms more accountable.

- How does the "free speech" argument factor into the debate about hate speech moderation?

Proponents of free speech argue that all ideas, even offensive ones, should be allowed to circulate freely. However, critics argue that hate speech can incite violence and discrimination and therefore should not be protected under the banner of free speech. Striking a balance between these competing values is a major challenge for policymakers and platform administrators.